Mirroring Tokopedia Live Shopping to Instagram Live

September 7, 2024

Background

In the world of live streaming, RTMP (Real-Time Messaging Protocol) plays a critical role in ingesting live video feeds into a platform. For those managing this infrastructure on Google Cloud Platform (GCP), distributing RTMP traffic evenly across multiple instances can be surprisingly difficult.

In one of my past projects, we encountered a serious load balancing issue when handling RTMP traffic using GCP’s External TCP Load Balancer. Despite configuring standard load balancing rules, some Compute Engine instances became overloaded while others sat nearly idle. This uneven distribution caused degraded stream quality, performance issues, and occasional ingestion failures.

Understanding the Problem

GCP offers several traffic distribution strategies through its load balancers. For our RTMP ingestion service, we initially used the Global External TCP Load Balancer, assuming it would evenly distribute incoming streams across the backend Compute Engine instances. However, this assumption failed to account for the long-lived nature of RTMP connections: each connection persists until the broadcaster ends their stream.

Because standard load balancers only decide where new connections go and never shift existing ones, this led to severe imbalances: some VMs were overloaded while others remained almost idle. This “hotspotting” forced us to overprovision the entire cluster to avoid choking critical instances.

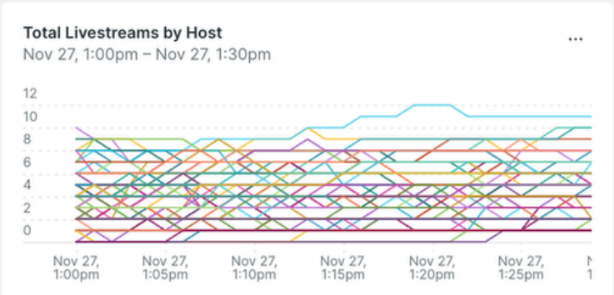

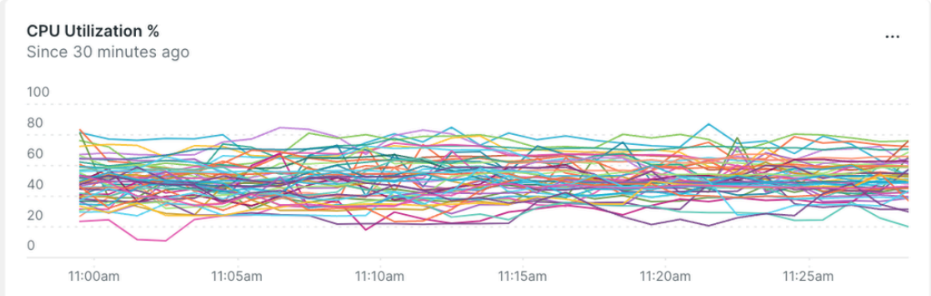

The dashboard screenshots above show this uneven CPU utilization before optimization, along with the number of active RTMP connections.


Why RTMP Service Needs Special Treatment

Unlike HTTP-based workloads where each request is independent, RTMP relies on persistent TCP connections. Once a client connects to a VM, the connection remains pinned to that instance until the stream ends — no matter how long it runs.

This means that even if a load balancer distributes new connections evenly, there’s no mechanism to rebalance existing connections if certain VMs accumulate disproportionately more traffic. Standard load balancers (including GCP’s) have no visibility into actual instance load, unless you explicitly tell them via health checks — which is the basis of the hack we applied.
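A toy simulation makes the point concrete. It assumes a simplified balancer that hands out new connections in strict round-robin order without looking at load, a hypothetical scale-out in which two empty VMs join three VMs already holding 100 streams each, and no streams ending during the run. The numbers are illustrative, not measurements from our cluster.

```python
from typing import List


def assign_round_robin(active: List[int], new_connections: int) -> List[int]:
    """Spread new connections round-robin over all VMs, ignoring current load.

    Existing connections stay pinned to their VM, so per-VM counts only grow.
    """
    loads = list(active)  # copy so the caller's list is not mutated
    for i in range(new_connections):
        loads[i % len(loads)] += 1
    return loads


# Hypothetical scenario: three busy VMs plus two freshly added, empty VMs.
before = [100, 100, 100, 0, 0]
after = assign_round_robin(before, 50)
print(after)  # [110, 110, 110, 10, 10]
```

Each VM receives the same share of new streams, so the 100-stream gap between the old and new VMs persists until broadcasters disconnect.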

The Infrastructure Hack: Dynamic Health Check Tuning

To mitigate this, I applied a simple but effective hack:

👉 Dynamically adjusting the health check response based on real-time connection count inside each VM.

Understanding Health Checks in Google Cloud

GCP uses health checks at two critical layers:

| Type | Purpose |
|---|---|
| Load Balancer Health Check | Ensures the load balancer only sends traffic to healthy VMs. If a VM fails the health check, it’s excluded from new connection assignments. This does not restart the instance itself. |
| Instance Group Health Check | Managed Instance Groups (MIGs) can automatically replace VMs that fail health checks. This is about self-healing, not traffic steering. |

Focus for This Hack: Load Balancer Health Check

This hack targets the Load Balancer Health Check, since we want to influence traffic flow, not VM lifecycle. The health check is attached to the load balancer’s backend service, and its result determines whether each VM is eligible to receive new RTMP connections.

How It Works

Each VM (running the RTMP ingest application) tracks its own current active connection count. The logic works like this:

- If the connection count exceeds a predefined threshold, the health check handler responds with 503 Service Unavailable.

- After the configured number of consecutive failed checks, the load balancer marks that VM unhealthy and stops assigning it new connections.

- Once load drops back to a healthy range, the handler responds with 200 OK, making the VM available again for new streams.
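The logic above fits in a few lines. The sketch below uses only the Python standard library; the `/healthz` path, port 8080, `MAX_CONNECTIONS = 200`, and the `ConnectionTracker` helper are hypothetical, and it assumes the RTMP server calls `tracker.opened()` and `tracker.closed()` from its own connection hooks. It returns 503 as soon as the count reaches the limit, so a VM stops attracting new streams at its tested capacity.

```python
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical per-VM limit; in practice this comes from load testing.
MAX_CONNECTIONS = 200


class ConnectionTracker:
    """Thread-safe count of the RTMP connections currently open on this VM."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._active = 0

    def opened(self) -> None:
        with self._lock:
            self._active += 1

    def closed(self) -> None:
        with self._lock:
            self._active = max(0, self._active - 1)

    @property
    def active(self) -> int:
        with self._lock:
            return self._active


# The (hypothetical) RTMP server calls tracker.opened()/closed() per stream.
tracker = ConnectionTracker()


def health_status(active: int, limit: int = MAX_CONNECTIONS) -> int:
    """503 once the VM is saturated, 200 while it can take new streams."""
    return 503 if active >= limit else 200


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path != "/healthz":  # hypothetical path set in the health check
            self.send_error(404)
            return
        active = tracker.active  # read once so status and body agree
        body = f"active={active}\n".encode()
        self.send_response(health_status(active))
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # silence per-probe access logs; probes arrive every few seconds


def serve(port: int = 8080) -> None:
    """Start the health check endpoint (blocks forever)."""
    ThreadingHTTPServer(("0.0.0.0", port), HealthHandler).serve_forever()
```

Calling `serve()` in a background thread of the ingest process exposes the endpoint; the port and path must match the load balancer's health check configuration.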

This is effectively a self-regulating feedback loop — the instance tells the load balancer when it’s saturated, keeping traffic more evenly balanced across all instances.
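To illustrate the feedback loop, a round-robin toy model can be extended so that the balancer skips any VM whose health check would currently fail. This is a simplification: health is re-evaluated instantly on every assignment (a real load balancer only learns about it at the next probes), the capacity of 120 streams is hypothetical, and no streams end during the run. What a real load balancer does when every backend is unhealthy depends on the product and is not modeled; such connections are merely counted.

```python
from typing import List, Tuple


def assign_with_health_checks(
    active: List[int], new_connections: int, capacity: int
) -> Tuple[List[int], int]:
    """Round-robin new connections, skipping VMs whose health check would fail.

    A VM is treated as unhealthy (503) once its active count reaches `capacity`.
    Returns the final loads and the number of connections no VM could accept.
    """
    loads = list(active)
    n = len(loads)
    cursor = 0  # next VM to try, as in plain round-robin
    unplaced = 0
    for _ in range(new_connections):
        for step in range(n):
            vm = (cursor + step) % n
            if loads[vm] < capacity:  # health check would return 200
                loads[vm] += 1
                cursor = vm + 1
                break
        else:
            unplaced += 1  # every VM reports unhealthy in this toy model
    return loads, unplaced


loads, unplaced = assign_with_health_checks([100, 100, 100, 0, 0], 300, capacity=120)
print(loads, unplaced)  # [120, 120, 120, 120, 120] 0
```

Starting from three busy VMs and two empty ones, new streams flow only to the empty VMs once the busy ones report 503, until every VM sits at the same level. In practice the check interval adds a delay, so a VM can overshoot its limit slightly before it is taken out of rotation.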

Consideration: Tuning Health Check Intervals

For this to work effectively, health check frequency matters.

A relaxed health check interval is too slow for dynamic streaming workloads: between two probes, a saturated VM keeps accepting new streams.

In this case, we lowered the health check interval to 2 seconds, so the load balancer checks instance health every 2 seconds and reacts far more quickly to load spikes.

This faster feedback helps the system respond gracefully to traffic bursts, rather than piling new connections onto already stressed VMs.
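The effect of the interval can be estimated with simple arithmetic: a saturated VM is removed from rotation only after it fails `unhealthyThreshold` consecutive probes spaced `checkIntervalSec` apart, so the reaction time is roughly their product. The dictionary mirrors field names of the Compute Engine `HealthCheck` REST resource; the name, port, request path, threshold values, and the 30-second comparison interval are hypothetical.

```python
def health_check_body(check_interval_sec: int, unhealthy_threshold: int) -> dict:
    """Build a Compute Engine HealthCheck resource body.

    Field names follow the HealthCheck REST resource; the name, port and
    request path are hypothetical.
    """
    return {
        "name": "rtmp-ingest-hc",
        "type": "HTTP",  # HTTP, so the 503/200 status code decides health
        "checkIntervalSec": check_interval_sec,
        # GCP rejects a timeoutSec larger than checkIntervalSec.
        "timeoutSec": min(check_interval_sec, 5),
        "healthyThreshold": 2,
        "unhealthyThreshold": unhealthy_threshold,
        "httpHealthCheck": {"port": 8080, "requestPath": "/healthz"},
    }


def worst_case_detection_sec(body: dict) -> int:
    """Rough upper bound on how long a saturated VM keeps getting new streams.

    The VM must fail `unhealthyThreshold` consecutive probes spaced
    `checkIntervalSec` apart; probe latency is ignored.
    """
    return body["checkIntervalSec"] * body["unhealthyThreshold"]


for interval in (30, 2):  # a relaxed interval vs. the 2 s used here
    hc = health_check_body(check_interval_sec=interval, unhealthy_threshold=2)
    print(f"interval={interval}s -> ~{worst_case_detection_sec(hc)}s to react")
```

With two failed probes required, the 2-second interval cuts the reaction window from about a minute to about four seconds in this example.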

Results

This small adjustment delivered huge savings:

- Compute instance count reduced from ~120 to 60-70.

- Monthly compute costs dropped by nearly $20,000.

- CPU utilization across instances became far more balanced, eliminating hotspots.

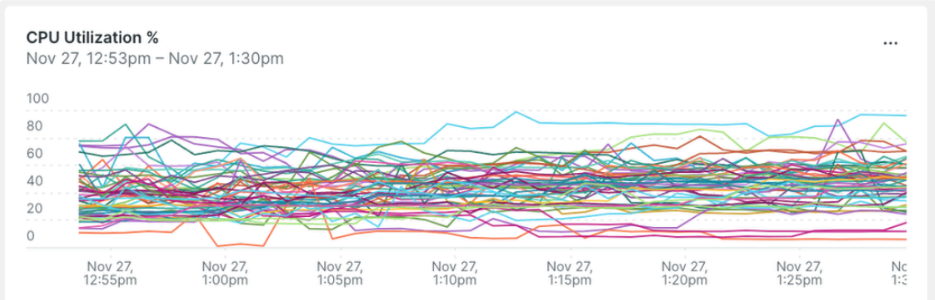

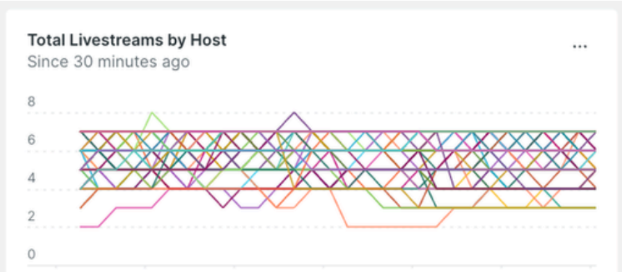

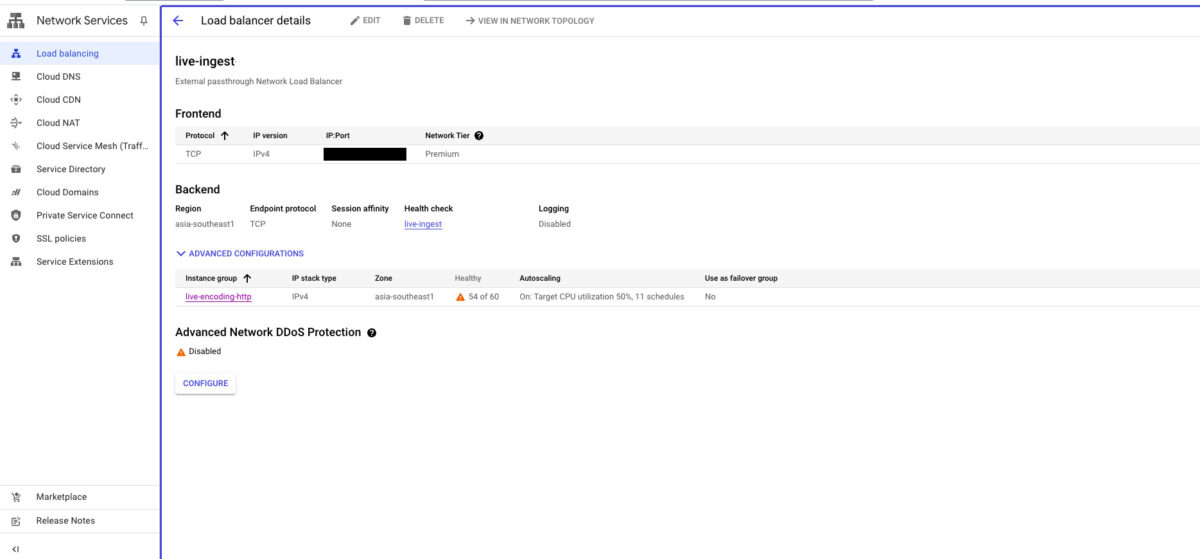

The screenshots above show the same dashboard after deploying this optimization.


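Also, do not panic when you see health check failures in the GCP load balancer: with this setup, a failing check simply means a VM is at capacity and temporarily not accepting new streams.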

When Does This Hack Work Best?

This approach is ideal for:

✅ Long-lived connections, such as:

- RTMP ingest servers

- WebSockets

- Persistent TCP tunnels

✅ Predictable per-instance capacity (after load testing), so you know the maximum safe connection count per VM.

🚫 It’s unnecessary for stateless, short-lived traffic (like HTTP APIs), where standard load balancing works fine.
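One way to turn load-test results into the per-VM connection threshold, sketched under assumptions: the test records CPU utilization at several connection counts, the target is 70% CPU, and 10% headroom is kept for bursts. The sample data and both parameters are hypothetical.

```python
from typing import Dict


def max_safe_connections(
    samples: Dict[int, float], cpu_target: float, headroom_pct: int = 10
) -> int:
    """Derive a per-VM connection limit from load-test samples.

    `samples` maps an active connection count to the CPU utilization (%)
    observed at that count. The result is the largest tested count whose CPU
    stayed at or below `cpu_target`, reduced by `headroom_pct` percent.
    """
    within_target = [conns for conns, cpu in samples.items() if cpu <= cpu_target]
    if not within_target:
        raise ValueError("no tested connection count stays within the CPU target")
    # Integer arithmetic avoids floating-point rounding at the boundary.
    return max(within_target) * (100 - headroom_pct) // 100


# Hypothetical load-test results for one VM machine type.
load_test = {50: 22.0, 100: 41.5, 150: 58.0, 200: 71.0, 250: 86.5}
print(max_safe_connections(load_test, cpu_target=70.0))  # 135
```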

Other Approaches

While this health check hack proved highly effective, there are other architectural approaches that could have addressed the same issue. In fact, I seriously considered introducing a connection-aware proxy layer using HAProxy or Envoy. However, at that time, our primary objective was to reduce compute costs quickly, with minimal changes and the shortest possible timeline. Given those constraints, leveraging GCP’s native health check mechanism offered the fastest path to impact, making it the right choice for that moment.

That said, each approach comes with its own set of trade-offs:

| Approach | Pros | Cons |
|---|---|---|
| Dynamic Health Check Hack (This Article) | 1. Extremely fast to implement. 2. Leverages existing GCP infrastructure. 3. Requires no additional components or proxies. 4. Directly reduced compute cost by ~45%. | 1. Health check behavior is GCP-specific, making it harder to reuse if you migrate clouds. 2. Limited visibility into actual traffic flow: you rely on an indirect signal (connection count). |
| Connection-Aware Proxy (HAProxy/Envoy) | 1. Completely decouples application logic from traffic management. 2. Full real-time visibility into each RTMP connection. 3. Works across multi-cloud and hybrid environments. | 1. Introduces another operational layer: the proxy itself needs scaling, monitoring, and an HA setup, which means maintaining yet another component 😢 2. Requires time to design, build, and tune, so time-to-market is slower than with a quick health check hack. |
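For comparison, the core rule of a connection-aware proxy is least-connections routing: because every stream passes through the proxy, it can send each new connection to the backend with the fewest active ones. HAProxy offers this as `balance leastconn`. The sketch below only illustrates the selection rule with hypothetical backend names; health checking, draining, and proxy HA are left out.

```python
from typing import Dict


class LeastConnectionsPicker:
    """Route each new connection to the backend with the fewest active ones."""

    def __init__(self, active: Dict[str, int]) -> None:
        self.active = dict(active)

    def pick(self) -> str:
        # Ties are broken by backend name so the result is deterministic.
        backend = min(self.active, key=lambda b: (self.active[b], b))
        self.active[backend] += 1
        return backend

    def release(self, backend: str) -> None:
        # Called when a stream ends, so the proxy's view stays accurate.
        self.active[backend] -= 1


picker = LeastConnectionsPicker({"vm-a": 100, "vm-b": 100, "vm-c": 0})
for _ in range(130):
    picker.pick()
print(picker.active)  # {'vm-a': 110, 'vm-b': 110, 'vm-c': 110}
```

The empty backend absorbs new streams first; once all three are level, ties rotate through them by name, so each ends at 110.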

In summary, the health check hack is a short-term optimization that works especially well if you want quick cost savings inside GCP. However, for long-term flexibility, especially if you foresee migrating to a multi-cloud or hybrid environment, investing in a connection-aware proxy gives you far greater control and portability.

Lessons Learned

This experience highlights the importance of understanding traffic patterns when designing infrastructure. For live streaming ingestion services, persistent connections like RTMP demand smarter load management than simple round robin.

If you are building RTMP ingest clusters on GCP, and you want to avoid uneven utilization, consider integrating load-aware health checks into your design.

Reference Links

For anyone wanting to dive deeper into GCP’s health check and load balancing mechanisms, these official docs are invaluable: